History is riddled with examples of machine eradicating the jobs of man. From mining to car manufacturing and call centre operation, the robot – with its unwavering efficiency and tedious accuracy – is now favoured above the humble homosapien in all but a few industries. Prostitution just sprang (worryingly) to mind, but I’m not even sure about that anymore, to be honest. Ironically, as I write this paragraph, MS Word 2007 doesn’t recognise the word “homosapien”, so either it’s not as accurate as I first thought, or it has just decided that the word is now obsolete.

Anyway, the point of this post is that SEO – much like many other emerging industries – will soon succumb to the mighty power of the machine, and many of our jobs (yes, that means yours as well) will be at risk. Many of the signs of automation are already creeping in. Heck, we are all slaves right now to the biggest automated machine in the world – Google. But more specifically I mean automation within our own spheres, the ones that circumnavigate the search engines and have a give-and-take relationship with them. I argue that soon it will simply be machine against machine, and we will be mere bystanders, with spanners in our hands and vacant expressions on our faces, wondering if enough time has passed to get away with putting the kettle on again.

Automate What?…

Research and Monitoring

Monitoring your search marketing campaigns is becoming an increasingly automated affair. Maybe you still manually Google your rankings, but many use simple time-saving tools such as SEOSerp to quickly check their positions, or software such as Web CEO or CuteRank to check multiple rankings. It’s now very easy to schedule rank checks as frequently as you like, so your tool of choice just spits out a report of how you’re getting on. “But hang on a moment,” you say, “you still need a human to interpret the traffic and conversions you’re getting from those rankings, right?” Actually, no, you don’t. You can plug WordStream, for example, into your Google Analytics (GA) account to automatically monitor the conversion rates for individual keywords and get alerts for underperforming or high-performing terms. It even lets you semantically group keywords together so you can highlight the best-performing groups and target these further.
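To give a flavour of how simple the alerting part can be, here is a minimal Python sketch. It is not how WordStream works internally (I have no idea what goes on under its bonnet); the `KeywordStats` class, the `flag_keywords` function and the thresholds are all made up for illustration, and it assumes you have already exported visits and conversions per keyword from your analytics package.

```python
from dataclasses import dataclass


@dataclass
class KeywordStats:
    """Hypothetical per-keyword figures exported from an analytics account."""
    keyword: str
    visits: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        # Avoid dividing by zero for keywords with no visits.
        return self.conversions / self.visits if self.visits else 0.0


def flag_keywords(stats, low=0.01, high=0.05, min_visits=100):
    """Return {keyword: label} for keywords outside the normal range.

    The thresholds are arbitrary assumptions; tune them to your own site.
    """
    alerts = {}
    for s in stats:
        if s.visits < min_visits:
            continue  # too little traffic to judge either way
        if s.conversion_rate < low:
            alerts[s.keyword] = "underperforming"
        elif s.conversion_rate > high:
            alerts[s.keyword] = "high performing"
    return alerts


if __name__ == "__main__":
    sample = [
        KeywordStats("red shoes", visits=1000, conversions=5),
        KeywordStats("blue shoes", visits=500, conversions=40),
        KeywordStats("green shoes", visits=50, conversions=0),
    ]
    for keyword, label in flag_keywords(sample).items():
        print(f"{keyword}: {label}")
```

Schedule something like this to run after each analytics export and the "interpretation" step becomes an email in your inbox rather than an afternoon in a spreadsheet.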

But it gets better…

Mark Cook of Further recently spoke at an SEO event in Brighton, England, about a system they use to automatically capture tonnes of data about their rankings and CTRs. Tired of relying on Google Keyword Tool for predicted traffic and the good old leaked AOL data on CTR, Further decided to build their own engine that systematically records Google rankings for their keywords and correlates these with their traffic stats. This not only gives them a great insight into relative CTR for different positions in the SERPs (including Google Images, Places etc.), but also allows them to predict traffic volumes for keywords they don’t necessarily rank for, by carefully analysing the increasing amounts of data they have already captured (a step that could itself be automated).
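I don’t know how Further’s engine is actually built, but the core idea of correlating positions with traffic can be sketched in a few lines of Python. Everything here is an assumption for illustration: the function names are mine, and each observation is taken to be a (position, searches, clicks) tuple, where the search count would have to come from some volume estimate of your own.

```python
from collections import defaultdict


def ctr_by_position(observations):
    """Aggregate (position, searches, clicks) tuples into {position: CTR}."""
    searches = defaultdict(int)
    clicks = defaultdict(int)
    for position, searched, clicked in observations:
        searches[position] += searched
        clicks[position] += clicked
    # Skip positions with no recorded searches to avoid dividing by zero.
    return {p: clicks[p] / searches[p] for p in searches if searches[p] > 0}


def predict_visits(monthly_searches, position, ctr_table):
    """Estimate monthly visits at a given position, or None if we have no data."""
    ctr = ctr_table.get(position)
    return None if ctr is None else monthly_searches * ctr


if __name__ == "__main__":
    # Made-up numbers purely to show the shape of the data.
    data = [(1, 1000, 300), (1, 1000, 340), (2, 500, 75)]
    table = ctr_by_position(data)
    print(table)
    print(predict_visits(5000, 1, table))
```

The more observations you feed in, the more trustworthy the table becomes, which is exactly why capturing the data automatically and continuously is the clever bit.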

Link Building

Most ‘automated link building’ tools and services are admittedly a pile of rubbish, but over the years I have certainly seen a shift in this direction. The sheer volume of such products on the market is indication enough that our desire for ‘hands-free’ link building is increasing. Services such as Linxboss and Xrumer claim to meet this demand, and if you think about it, article spinning is very much an exploitation of automated software and server work as well. I won’t pass judgment on the aforementioned link building methods, but what I will say is that by and large the automated options available today are far superior to those of yesteryear (I’m thinking blog commenting tools, directory submission software etc.).

I would happily predict that the advancement in automated link building robots will continue to accelerate, and rightly or wrongly they will become the method of choice for most SEOs…some would argue they already are.

Onsite Optimisation

The most exciting area of automated SEO, in my opinion, is onsite optimisation. Classically, onsite optimisation is done ‘by hand’, based on industry recommendations, anecdotal evidence, or at best, trial and error. However, handing something as important and as sensitive as onsite optimisation over to human interpretation is a hideously inefficient idea, not to mention that a site might have 100k pages, each needing to be optimised differently. Hand the job to a computer, however, and you can have that kettle on again in no time.

I first stumbled across the idea of automated onsite optimisation when reading this headache-inducing post over at the brilliant Blue Hat SEO blog. Now I don’t know if Eli called his site Blue Hat SEO because it’s so offbeat compared with traditional white, black, grey hat etc., or because of the colourful language he uses, but he’s sure got some great concepts:

What he proposes is setting up pages that automatically tweak themselves here and there, adding and removing keywords in various areas, changing keyword ordering and pluralisation, editing meta descriptions and internal links etc. This is all closely correlated with rank checking for the individual pages. When a change causes an increase in position for the desired keyword(s), that change is given a stronger weighting value and the next element of the page is tweaked to achieve another boost. Think of it like fully automated A/B testing. Now consider if all the pages could communicate with each other and tell one another which changes caused the biggest increases (or drops) in ranking. You could essentially build a site that learns and constantly refines itself to become the most SEO-friendly possible.
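To make that a little less headache-inducing, here is a rough Python sketch of the tweak, check, keep-or-revert loop. This is my own simplification rather than Eli’s code: `tune_page` and its arguments are hypothetical, `check_rank` stands in for whatever rank checker you use (lower number means better position), and a real version would have to wait for re-indexing and cope with noisy rankings between each change.

```python
def tune_page(page, variants, check_rank):
    """Try each variant of each page element, keeping changes that improve rank.

    page:       dict of element name -> current value, e.g. {"title": "Shoes"}
    variants:   dict of element name -> list of candidate values
    check_rank: callable taking a page dict and returning its position (1 is best)

    Returns the tuned page, its rank, and a weight for every change tried
    (positive weight = positions gained, negative = positions lost).
    """
    page = dict(page)  # work on a copy, leave the caller's page alone
    best_rank = check_rank(page)
    weights = {}
    for element, options in variants.items():
        for option in options:
            if option == page.get(element):
                continue  # already live, nothing to test
            trial = {**page, element: option}
            rank = check_rank(trial)
            weights[(element, option)] = best_rank - rank
            if rank < best_rank:
                # The change helped: keep it and move on from the new baseline.
                page, best_rank = trial, rank
    return page, best_rank, weights


if __name__ == "__main__":
    # A pretend rank checker so the sketch runs without hitting a search engine.
    def fake_rank(p):
        rank = 10
        if p["title"] == "Cheap Red Shoes":
            rank -= 3
        if p["h1"] == "Red Shoes":
            rank -= 2
        return rank

    start = {"title": "Shoes", "h1": "Welcome"}
    options = {
        "title": ["Shoes", "Cheap Red Shoes", "Red Shoe"],
        "h1": ["Welcome", "Red Shoes"],
    }
    print(tune_page(start, options, fake_rank))
```

The `weights` dictionary is the part the pages could share with one another: a change that earned a high weight on one page becomes the first thing to try on the next.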

Of course there are issues with this concept, and of course it would require a great deal of human coding to begin with, but the key takeaway is that the idea is very valid and very possible.

Reporting

I’m going to keep this bit concise as I’m very conscious of the fact this post is getting quite long (a robot would have realised this way back).

Having worked at a couple of SEO agencies myself, I can testify to the fact that reporting to clients can take up frustrating amounts of time. Documenting work completed, rankings, traffic, links etc. all takes time, and by and large it can all be automated.

The tools I have already mentioned can automatically generate ranking and traffic reports, and can furthermore be plugged into a centralised custom or off-the-shelf reporting system. Link monitoring and data sharing with clients can be handled by tools such as BuzzStream, and there are numerous platforms on the market that allow clients to log in and quickly update themselves on the work being completed for their site.

Conclusion

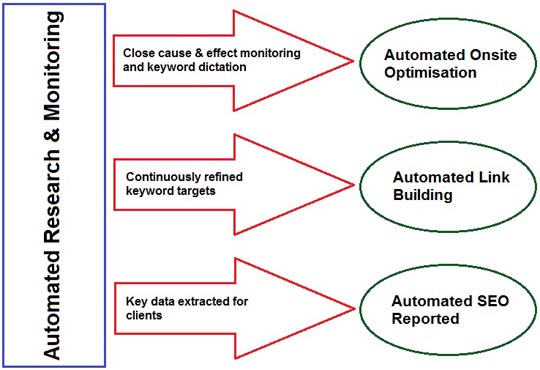

These various areas of SEO automation each have the potential to be very powerful and efficient. Indeed, I know of many who are playing around with similar concepts, and some who are streaking ahead with fully working models. Consider if you will though the potential of linking all of these automated elements together. A bit like this:

A system could be built that eventually requires very little human input, and thus does away with all human error, guesswork and lethargy. It would constantly refine itself and automatically adapt to minute changes in the search landscape… until, that is, someone spills coffee on the server.
